Does Long Term Data Retention Matter In Observability? Users Say Yes.

A major inhibitor for longer data retention is cost, but this doesn’t need to be the case.

Observability Data Growth: Challenge or Opportunity?

We’ve said it before: observability is fundamentally a data problem. You need the right data at the right time to troubleshoot effectively, and you often don’t know ahead of time what that data will be. If it were easy to know in advance what data you need, you’d already have great reliability. Most of us, however, need to collect a lot of telemetry, and that means observability data growth can start to look like a problem rather than an asset.

As your organization scales, so does your observability data. This leads to a few inevitable questions:

- What data is important/should be collected?

- How do we store and manage large volumes of data?

- How long should we keep observability data?

- At what point does the value of data begin to diminish?

Despite these looming questions, there are many reasons to keep more observability data around for longer. Aside from letting you look at your operations and their impact on the line of business with more historical context, long-term retention is also useful for compliance-related auditing, security investigations, and the many other unforeseeable circumstances that can arise.

A major inhibitor for data retention is cost, but this doesn’t need to be the case. We believe keeping your data for 13 months or longer shouldn’t be unfathomable, and if it sounds that way to you, it probably means you’ve been paying too much to index and store your observability and monitoring data. Many organizations discard data solely because of cost concerns, but it is possible to keep data actively searchable for longer without blowing your budget on ingestion and indexing.

Does Long-Term Retention Matter?

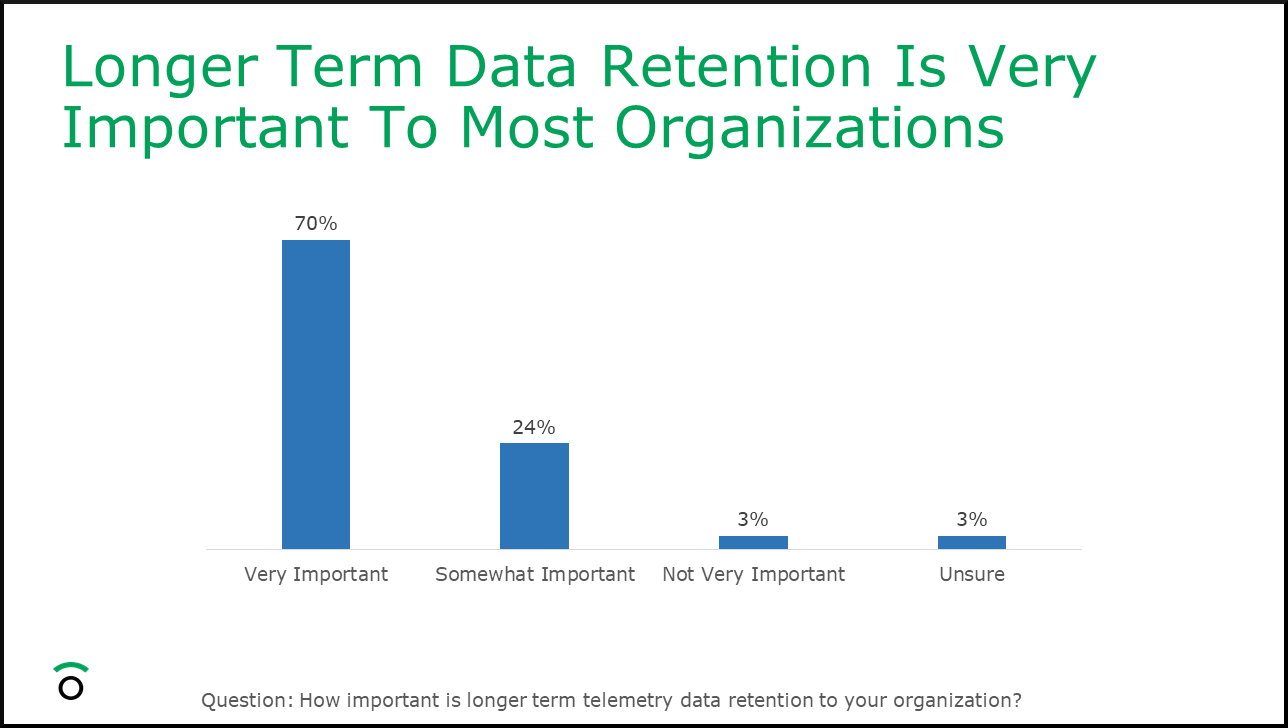

We wanted to find out if users also see the value in longer-term data retention. In our soon-to-be-released State of Observability 2022 report, we asked hundreds of observability users about the technologies and practices used within their organizations, as well as the challenges they’re facing along the way. Nearly all users (94%) indicated that longer-term retention is important to them, and 70% said it’s very important.

Of course, having the most recent data is paramount when troubleshooting, but there are obvious downsides if you’re only keeping a few days or weeks of searchable data. So how long is long enough to keep telemetry around? It can be hard to know the exact answer until something happens that causes you to need the data, which is exactly why it’s worth giving your observability data a longer shelf life. Not all observability data is going to be valuable one month or one year down the line, but you won’t always know from the outset what can or should be discarded. It’s typically better to have it and not need it than to need it and not have it.

However, keeping data around longer is useful but not always feasible, so there has to be a good reason to keep it and real value in long-term storage. A year’s worth of logs can be crucial for auditing as regulatory compliance becomes increasingly critical. Your auditors will also appreciate the historical data in case it’s needed for forensic work.

Indexing Makes Cost an Obstacle

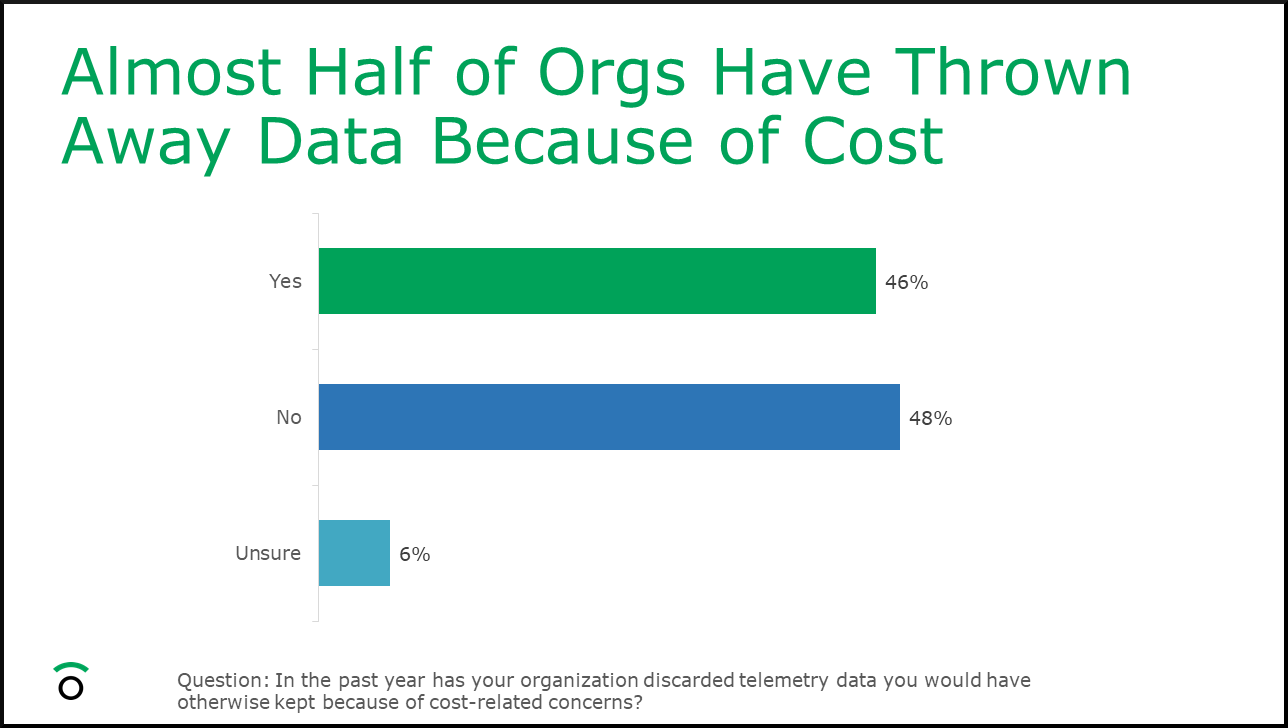

When we asked users if they’d discarded telemetry data in the past year due to cost concerns, nearly half said they had. Granted, not all telemetry has significant historical value, but if you’re tossing out data strictly because you’re worried about cost, and not because the data has limited value, then that’s a problem. Ideally, cost should not outweigh the desire to achieve beneficial business outcomes.

Longer retention of telemetry is not a new concept, but the architectures and pricing models of some observability tools make it very difficult to justify keeping historical data. With tools that charge based on ingest volume, users often end up sampling their data heavily when the cost to index is high. Though sampling is a viable stopgap, users may unintentionally “sample out” the very thing they’ll need later on. The need to store raw data alongside large volumes of index data also drives up the infrastructure costs of those tools. To avoid the cost of keeping a massive index, users inevitably opt to archive data sooner rather than keep it searchable for longer.
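To make the “sampled out” risk concrete, here is a minimal sketch of the arithmetic. It assumes uniform random sampling in which each event is kept independently at the same rate; real tools may sample differently (per trace, per service, or with rules), so treat the function name and the numbers as illustrative only, not as figures from the survey.

```python
def prob_event_retained(sample_rate: float, occurrences: int) -> float:
    """Chance that at least one of `occurrences` events survives sampling.

    Assumption (not from the article): every event is kept independently
    with probability `sample_rate`.
    """
    if not 0.0 <= sample_rate <= 1.0:
        raise ValueError("sample_rate must be between 0 and 1")
    if occurrences < 0:
        raise ValueError("occurrences must be non-negative")
    # P(at least one kept) = 1 - P(all dropped)
    return 1.0 - (1.0 - sample_rate) ** occurrences


if __name__ == "__main__":
    # Hypothetical scenario: an error that appears only a few times.
    for rate in (0.01, 0.10, 0.50):
        for count in (1, 3, 10):
            p = prob_event_retained(rate, count)
            print(f"keep {rate:.0%} of events, error seen {count}x "
                  f"-> {p:.1%} chance it is still in your data")
```

Under these assumptions, the rarer the event and the heavier the sampling, the more likely it is that no copy of it remains when you go looking for it later.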

Some organizations have begun using data pipelines to route more of their telemetry data to cold storage instead of their observability tools to save on indexing costs, but they create another data silo in the process. These types of tools can also consume valuable time on fine-tuning indexing policies to manage fresh and archived data stores. Observability shouldn’t create silos and toil; it should reduce or eliminate them.

Get More Value From Your Data

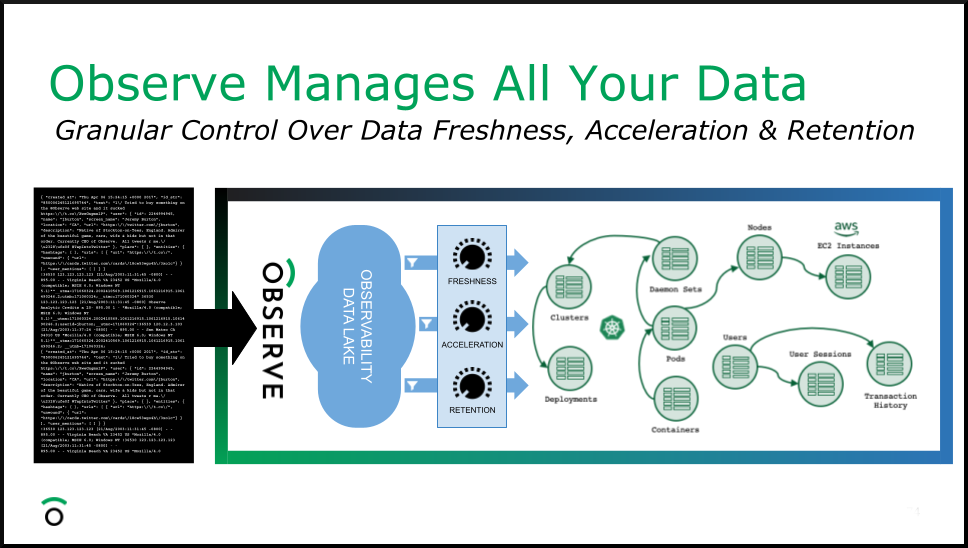

Observe uses a 13-month window by default, but customers can decide how long they want to keep their data. More data does not guarantee better insights. In fact, our State of Observability report showed that 50% of organizations ingesting between 501 GB and 1 TB of observability data per day cited investigation times of a few days or longer. Data is one part of the equation, but you also need tooling that can break down silos and give context to your data so you get the most out of it.

Because Observe is built on Snowflake, which uses Amazon S3 as its native storage, that data is always going to be queryable no matter how old it is. You don’t need to rehydrate data from cold storage – paying even more ingest costs – so tools can search it, or spend countless hours attempting to devise a foolproof data-tiering scheme and architecture. Observe provides customers with dials to tune things like query speed and freshness of data, but handles the management of data in a uniform way, transparently to the user.

If keeping data longer and breaking down silos sounds useful, and you want to take your observability strategy to the next level, we encourage you to view our demo of Observe in action.